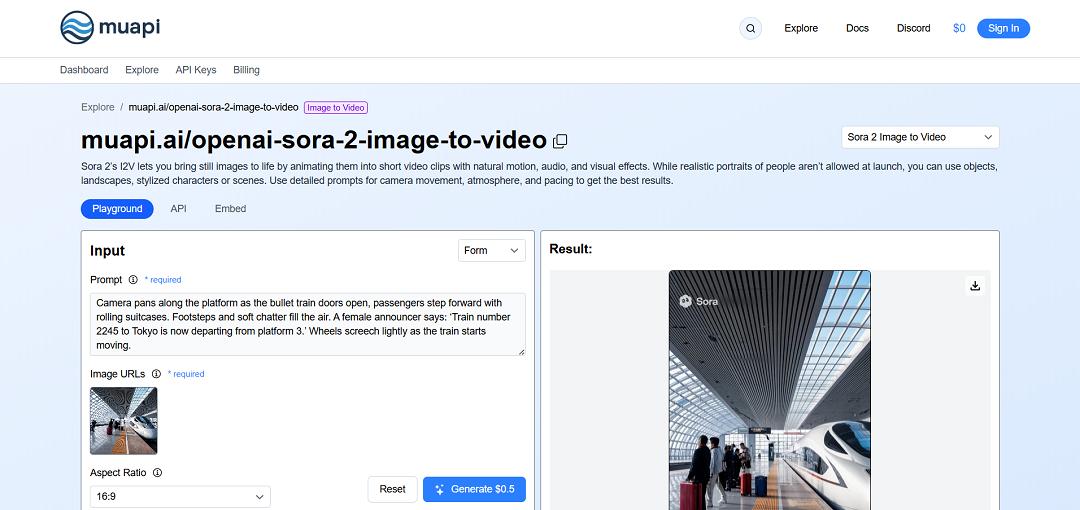

The OpenAI Sora 2 Image-to-Video feature on MuAPI AI turns a static image into a short video clip. Users upload an image and write a descriptive prompt that tells the model what to animate: camera movements, subject motion, ambient effects, and even synchronized audio. The model currently restricts realistic human portraits as input, so it works best with landscapes, objects, or stylized characters. That makes it a good fit for promotional content, storytelling, and other creative visual projects. Because the prompt drives the motion, precise wording matters: directives such as “camera dolly backwards” or “fade into sunrise” help the animation match the creator’s intent.
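The prompt is what steers the motion, so it can help to assemble it from separate parts (subject, camera move, subject motion, ambient effects, audio cue) rather than writing it freehand. The sketch below is a minimal illustration under stated assumptions, not MuAPI's API. The `MotionPrompt` class, the `build_request` helper, and the `image_url` and `prompt` field names are all hypothetical. Consult MuAPI's own documentation for the real request schema.

```python
from dataclasses import dataclass, field


@dataclass
class MotionPrompt:
    """Hypothetical container for the parts of an image-to-video prompt."""

    subject: str
    camera: str = ""            # e.g. "camera dolly backwards"
    subject_motion: str = ""    # e.g. "waves roll against the rocks"
    ambience: list[str] = field(default_factory=list)  # e.g. ["fade into sunrise"]
    audio: str = ""             # optional sound cue for synchronized audio

    def render(self) -> str:
        # Keep only the parts that were filled in, in a fixed order.
        parts = [self.subject]
        if self.camera:
            parts.append(self.camera)
        if self.subject_motion:
            parts.append(self.subject_motion)
        parts.extend(self.ambience)
        if self.audio:
            parts.append(f"audio: {self.audio}")
        # Join into one sentence-style prompt.
        return ", ".join(parts) + "."


def build_request(image_url: str, prompt: MotionPrompt) -> dict:
    """Assemble a request body.

    ASSUMPTION: the field names below are placeholders, not MuAPI's
    documented schema.
    """
    return {"image_url": image_url, "prompt": prompt.render()}


if __name__ == "__main__":
    p = MotionPrompt(
        subject="A lighthouse on a rocky coast at dawn",
        camera="camera dolly backwards",
        subject_motion="waves roll against the rocks",
        ambience=["fade into sunrise"],
        audio="gentle surf and seagulls",
    )
    print(build_request("https://example.com/lighthouse.png", p))
```

Keeping the motion directives as separate fields makes it easy to vary one element, such as the camera move, while holding the rest of the prompt fixed when comparing generations.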

Sora 2 is one of several generative tools on MuAPI AI, alongside text-to-image, image-to-image, and text-to-video models, so creators can move between these workflows on a single platform. A Pro variant of Sora 2 offers higher fidelity, more realistic lighting, and smoother motion for professional-grade production. Overall, the Sora 2 Image-to-Video tool gives creators, social media marketers, and storytellers a straightforward way to bring still visuals to life. Its interface and flexible prompting make it practical for producing short-form cinematic videos while keeping creative control over motion and style.